Stanford University researchers have released a report highlighting significant transparency deficiencies among major AI models. Titled “The Foundation Model Transparency Index,” the report scrutinizes models such as OpenAI’s GPT-4, as well as those developed by Google, Meta, Anthropic, and others. Its aim is to draw attention to how little is disclosed about the data and human labor involved in training these models, and to urge companies to improve their disclosure practices.

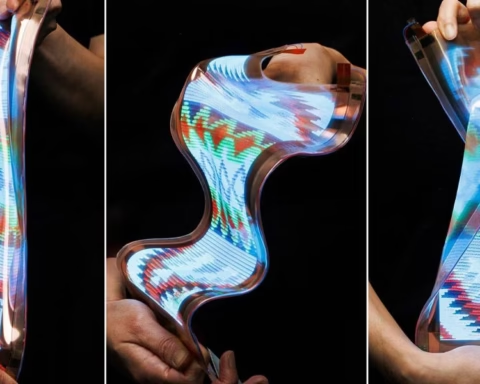

Foundation models are AI systems trained on extensive datasets, enabling them to perform diverse tasks, such as writing and generating images. These models, crucial to the advancement of generative AI technology, gained prominence, especially after the introduction of OpenAI’s ChatGPT in November 2022. As businesses and organizations integrate these models into their operations, customizing them for specific purposes, the researchers emphasize the importance of comprehending their limitations and biases.

“Less transparency makes it harder for other businesses to know if they can safely build applications that rely on commercial foundation models; for academics to rely on commercial foundation models for research; for policymakers to design meaningful policies to rein in this powerful technology; and for consumers to understand model limitations or seek redress for harms caused,” writes Stanford in a news release.

The Transparency Index assessed 10 prominent foundation models against 100 distinct indicators covering aspects such as training data, labor practices, and the computational resources used during development. Each indicator measured disclosure, that is, whether the developer had made the relevant information public. For instance, in the “data labor” category, the researchers asked, “Is there disclosure regarding the phases of the data pipeline where human labor is involved?”
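
To make the scoring arithmetic concrete, here is a minimal sketch of how a score out of 100 could be tallied from disclosure indicators. It assumes each indicator is a simple yes/no check and that a model's score is the number of indicators it satisfies; the `Indicator` class, the function names, and the two sample questions marked "hypothetical" are illustrative and do not come from the report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    category: str    # e.g. "data labor"
    question: str    # the disclosure question being asked
    disclosed: bool  # assumption: each indicator is a yes/no check


def transparency_score(indicators):
    """Count how many indicators the developer satisfied."""
    return sum(1 for ind in indicators if ind.disclosed)


def score_by_category(indicators):
    """Return {category: (indicators met, indicators asked)}."""
    totals = {}
    for ind in indicators:
        met, asked = totals.get(ind.category, (0, 0))
        totals[ind.category] = (met + int(ind.disclosed), asked + 1)
    return totals


# Toy input: three indicators instead of the report's 100.
# The `disclosed` values are made up for illustration only.
example = [
    Indicator(
        "data labor",
        "Is there disclosure regarding the phases of the data pipeline "
        "where human labor is involved?",
        False,
    ),
    Indicator("training data", "Hypothetical: are data sources described?", True),
    Indicator("compute", "Hypothetical: is compute usage disclosed?", True),
]

print(transparency_score(example))
print(score_by_category(example))
```

Under these assumptions, a model evaluated on 100 such indicators would receive a score equal to the number of questions answered "yes," which is how a result like 54 out of 100 can be read.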

The report found that every model assessed received a score the researchers deemed “unimpressive.” Meta’s Llama 2 language model achieved the highest score, 54 out of 100, while Amazon’s Titan model ranked lowest with 12 out of 100. OpenAI’s GPT-4 scored 48 out of 100.

The team responsible for the Foundation Model Transparency Index research paper comprises primary author Rishi Bommasani, a PhD candidate in computer science at Stanford, along with Kevin Klyman, Shayne Longpre, Sayash Kapoor, Nestor Maslej, Betty Xiong, and Daniel Zhang.

The shift toward reduced openness in major AI models over the past three years stems from a range of factors, including competitive tensions among Big Tech companies and concerns about the potential risks associated with AI. Notably, employees at OpenAI have reconsidered the company’s earlier open approach to AI, citing the potential dangers of spreading the technology.