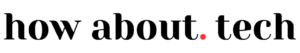

Nvidia has introduced the HGX H200 Tensor Core GPU, leveraging the Hopper architecture to enhance the acceleration of AI applications. This follows the release of the H100 GPU last year, which was previously Nvidia’s most potent AI GPU chip. The widespread deployment of the HGX H200 holds the potential to empower significantly more robust AI models, resulting in faster response times for existing ones, such as ChatGPT, in the foreseeable future.

Data center GPUs like this are generally not designed for graphics. GPUs excel in AI applications due to their ability to execute extensive parallel matrix multiplications, a crucial element for the functioning of neural networks. They play a vital role in both the training phase of constructing an AI model and the “inference” phase, where users input data into an AI model and receive results in return.

In the press release, Ian Buck, vice president of hyperscale and HPC at Nvidia said that “To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory”. “With Nvidia H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

Experts assert that a significant obstacle to AI progress in the past year has been the shortage of computing power, commonly referred to as “compute.” This scarcity has impeded the deployment of existing AI models and decelerated the development of new ones, with the primary culprit being a deficit of potent GPUs that accelerate AI models. While one solution involves increasing chip production, another effective approach is enhancing the power of AI chips. This second strategy could position the H200 as an appealing product for cloud providers, offering a potential remedy to the compute bottleneck.

Take OpenAI, for instance; they’ve consistently mentioned their shortage of GPU resources, leading to performance slowdowns in ChatGPT. To continue offering any service, the company resorts to rate limiting. In theory, incorporating the H200 could potentially provide the current AI language models, powering ChatGPT, with additional resources, allowing for improved service to a larger customer base.

Nvidia claims that the H200 is the first GPU featuring HBM3e memory. With the inclusion of HBM3e, the H200 boasts 141GB of memory and an impressive bandwidth of 4.8 terabytes per second. This, according to Nvidia, is 2.4 times the memory bandwidth offered by the Nvidia A100, which was launched in 2020 and remains in high demand despite its age, owing to shortages of more potent chips.

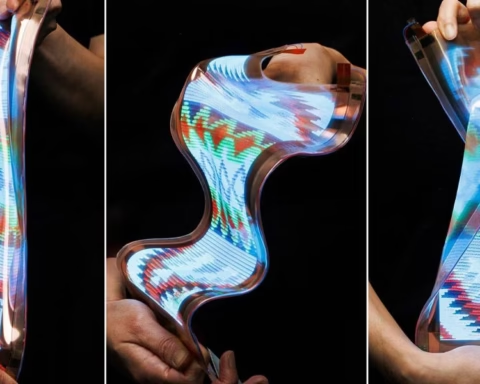

Nvidia is set to release the H200 in various form factors. This encompasses Nvidia HGX H200 server boards in configurations of four- and eight-ways, designed to be compatible with both hardware and software found in HGX H100 systems. Additionally, it will be offered in the Nvidia GH200 Grace Hopper Superchip, a unique package integrating both a CPU and GPU for an extra boost in AI performance.

Beginning next year, Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are set to be the inaugural cloud service providers to implement H200-based instances. Nvidia has announced that the H200 will be accessible from Q2 2024 onwards, through both global system manufacturers and various cloud service providers.