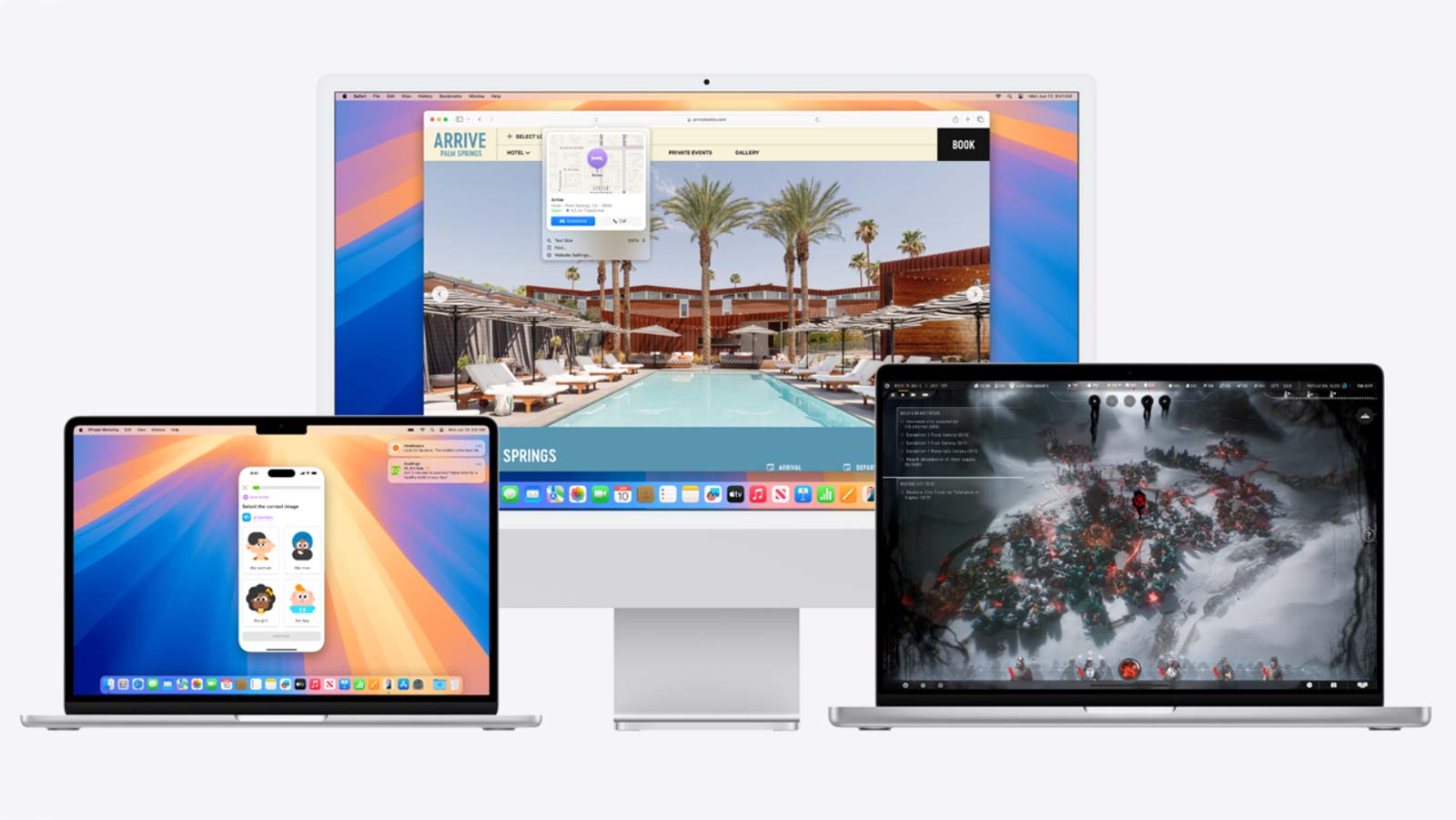

As Apple prepares to launch its new “Apple Intelligence” features, a closer look at the underlying code reveals the company’s careful approach to implementing generative AI technology.

Leaked files from the macOS Sequoia beta show a series of plaintext JSON prompts that aim to guide the behavior of Apple’s large language model (LLM)-powered AI assistant. These prompts demonstrate Apple’s concerted effort to prevent the kinds of issues that have plagued other generative AI chatbots, such as hallucinations, factual inaccuracies, and security vulnerabilities.

The prompts cover a range of use cases, from summarizing texts to providing smart replies for emails. Many of them emphasize the need for the AI to remain factual, concise, and within the boundaries of its defined role.

Phrases like “Do not hallucinate” and “Do not make up factual information” indicate Apple’s determination to keep the AI grounded in reality.
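To make the idea concrete, here is a minimal Python sketch of how a plaintext JSON prompt could be inspected for guardrail phrases like these. The file layout, the `task` and `instructions` field names, and the sample instruction text are assumptions made for illustration. They are not Apple's actual schema or prompt wording. Only the two quoted guardrail phrases come from the reported prompts.

```python
import json

# Hypothetical prompt file content. The field names and the summarization
# instruction are illustrative assumptions, not Apple's real format.
PROMPT_JSON = """
{
  "task": "summarize_text",
  "instructions": "Summarize the provided text. Do not hallucinate. Do not make up factual information."
}
"""

# The two guardrail phrases quoted in the article.
GUARDRAIL_PHRASES = (
    "Do not hallucinate",
    "Do not make up factual information",
)


def find_guardrails(raw_json, phrases=GUARDRAIL_PHRASES):
    """Return the guardrail phrases that appear in the prompt's instructions."""
    prompt = json.loads(raw_json)
    # A missing "instructions" field is treated as an empty prompt.
    lowered = prompt.get("instructions", "").lower()
    # Case-insensitive substring match, preserving the order of `phrases`.
    return [phrase for phrase in phrases if phrase.lower() in lowered]


if __name__ == "__main__":
    for phrase in find_guardrails(PROMPT_JSON):
        print("found guardrail:", phrase)
```

Because the prompts are ordinary text rather than compiled code, a check of this kind needs nothing beyond a JSON parser, which is part of why researchers could read them so easily.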

The presence of some minor grammatical errors in the prompts also serves as a reminder that Apple Intelligence is still a work in progress. The company is clearly taking a measured approach, testing and refining the system before its public debut later this year.

Unlike the rapid rollout of Bing Chat in early 2023, which faced backlash for its unpredictable behavior, Apple appears to be investing significant effort into ensuring its generative AI features are reliable and safe for consumer use. The accessibility of these prompts in the macOS Sequoia beta also suggests a level of transparency that could allow users and researchers to better understand the system’s inner workings.

With the release of iOS 18, iPadOS 18, and macOS 15 approaching, Apple’s generative AI capabilities may not be fully integrated at launch. However, the company’s cautious approach could pay dividends in the long run, as it aims to deliver a seamless and trustworthy AI experience to its users.